# How to Use EVGA Precision X Overlay Manual

Remember the speed/voltage curve we alluded to earlier? Manual overclocking merely serves to put the GPU on a different, higher speed/voltage plane. The EVGA utility provides an improbable 549MHz offset. Add on 50MHz and everything is shifted 50MHz up, including potential GPU Boost frequencies. Well, one has to use an approved overclocking utility and manually increase the GPU offset. With all this auto-overclock malarkey, how is the honest-to-goodness enthusiast supposed to overclock the card, and what are partners to do if they want to release custom-designed, factory-overclocked models?

Clever, eh? Again, though, you won't know it's happening. This also means power is reduced, as well.

The aforementioned chip monitors game load and should it determine that a low-intensity scene doesn't benefit from the GPU running at its nominal 1,0006MHz clock, the core frequency and voltage is reduced. Working on a pre-determined curve that marries voltage with frequency, GPU Boost can also reduce the core/shader speed and associated voltage when there's no reason to run at 1,006MHz. We'll leave it up to you to decide if this is a good move or not. NVIDIA, however, says that GPU Boost cannot be switched off: the GPU will increase speed no matter what you do. You may suppose an easy way around this is to switch off GPU Boost. This behind-the-scenes overclocking also means that two cards, in two different systems, can produce results that may vary by more than we'd like: a benchmarking nightmare. The two pictures show the GTX 680 operating at 1,097MHz and 1,110MHz for Batman: Arkham City and Battlefield 3, respectively. The only method of discerning the clockspeed is, currently, to use EVGA's Precision tool, which provides an overlay of the frequency. As an example, the GPU boosts to 1,084MHz with 1.150V and 1,110MHz with 1.175V: the increase is tied to a voltage jump, too. After examining numerous popular real-world games, NVIDIA says that every GTX 680 can boost to, on average, a 1,058MHz core/shader speed, with the GPU running up to 1,110MHz for certain titles. Should there be scope to do so, as explained above, the GPU boosts in both frequency and voltage, along pre-determined steps, though the process is invisible to the end user. This PCB-mounted microprocessor polls various parts of the card every 100ms to determine if the performance can be boosted. Point here is that the card's performance can be increased in many games without breaking through what NVIDIA believes to be a safe limit, which, by default, is the 195W TDP. Switch to 3DMark 11 and the benchmark is significantly more stressful, enough to hit the card's TDP limit. 
Games such as Battlefield 3 don't exact a huge toll, with the GPU functioning at around 75 per cent of maximum power. You see, not all games stress a GPU to the same degree we know this when looking at the power-draw figures for our own games. Just like Intel, NVIDIA wants to boost performance when there is TDP headroom to do so, given conductive temperatures, etc. The GTX 680 has a dedicated microprocessor that amalgamates a slew of data - temperature, actual power-draw, to name but two - and determines if the GPU can run faster. Now, GTX 680 core and shader-core clock is 1,006MHz, but this is not the frequency it will operate at during most gaming periods.

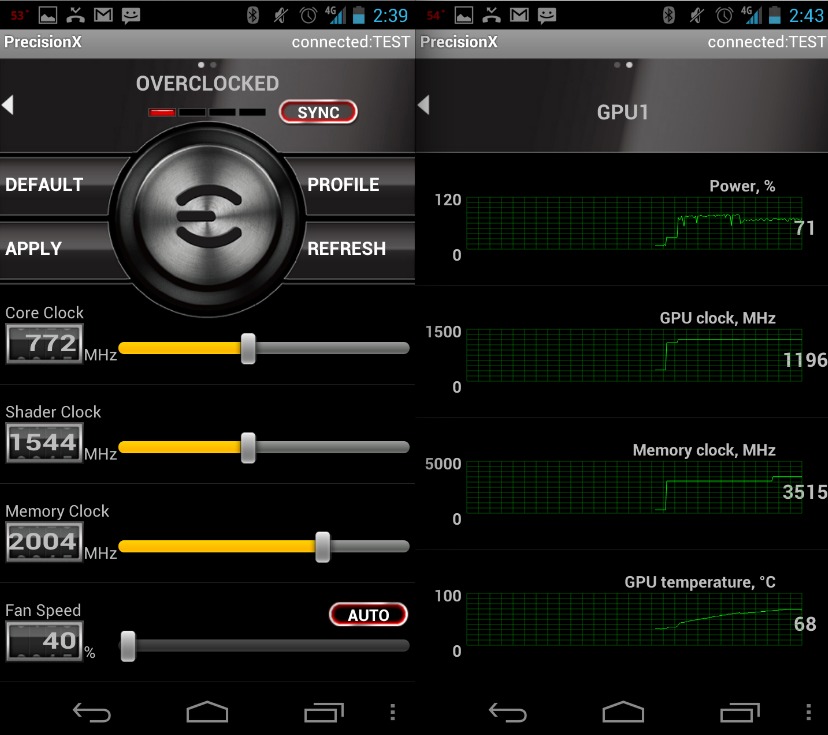

Any GPU engineer will tell you that a modern GPU has multiple clock domains, but for our purposes, let's consider GTX 580 to operate with a general clock of 772MHz and shader-core speed of 1,544MHz. NVIDIA's previous GPUs have run at one set speed, determined by the class of card, with, in recent times, the shader-core operating at twice this base clock. Taking a leaf out of Intel's book, NVIDIA is implementing a frequency-boosting feature called GPU Boost, and it needs some explainin'.
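
A minimal Python sketch can make the curve-plus-offset idea concrete. It models a GPU Boost-style controller, but it is not NVIDIA's algorithm, which is only described in outline here. The polling policy, the 95 per cent headroom threshold and the 50 per cent "light load" cutoff are assumptions made for illustration. Every curve point except the 1,006MHz base clock and the 1,084MHz/1.150V and 1,110MHz/1.175V steps is also invented, as are the names `VfPoint`, `apply_offset`, `next_index` and `simulate`. Each loop iteration stands in for one 100ms poll, and `apply_offset` shifts the whole curve up the way a manual offset does.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VfPoint:
    """One step on a voltage/frequency curve."""
    mhz: int
    volts: float


# Hypothetical curve. Only the 1,006MHz base clock and the 1,084MHz @ 1.150V and
# 1,110MHz @ 1.175V steps come from the article; all other values are invented.
BASE_CURVE = [
    VfPoint(967, 1.025),   # hypothetical down-clock step for light scenes
    VfPoint(1006, 1.062),  # nominal clock (voltage is an assumption)
    VfPoint(1045, 1.100),  # hypothetical intermediate step
    VfPoint(1084, 1.150),
    VfPoint(1110, 1.175),
]
BASE_INDEX = 1       # start at the nominal 1,006MHz step
TDP_WATTS = 195.0    # default power limit quoted in the article


def apply_offset(curve, offset_mhz):
    """Manual overclock: shift every step up by the same offset.

    Keeping the voltages unchanged is an assumption of this sketch.
    """
    return [VfPoint(p.mhz + offset_mhz, p.volts) for p in curve]


def next_index(index, power_watts, gpu_load, curve_len,
               headroom=0.95, light_load=0.5):
    """Choose the curve step for the next poll (hypothetical policy)."""
    if power_watts >= TDP_WATTS:
        return max(index - 1, 0)               # at the power limit: back off
    if gpu_load < light_load:
        return max(index - 1, 0)               # light scene: drop clock and voltage
    if power_watts < headroom * TDP_WATTS:
        return min(index + 1, curve_len - 1)   # headroom left: boost one step
    return index                               # near the limit: hold


def simulate(samples, offset_mhz=0):
    """Run the controller over (power_watts, gpu_load) readings, one per 100ms poll."""
    curve = apply_offset(BASE_CURVE, offset_mhz)
    index = BASE_INDEX
    clocks = []
    for power_watts, gpu_load in samples:
        index = next_index(index, power_watts, gpu_load, len(curve))
        clocks.append(curve[index].mhz)
    return clocks


if __name__ == "__main__":
    # Invented readings: a game near 75% of TDP, then a low-intensity scene.
    readings = [(146.0, 0.9), (150.0, 0.9), (152.0, 0.9), (155.0, 0.9), (60.0, 0.3)]
    print(simulate(readings))                 # [1045, 1084, 1110, 1110, 1084]
    print(simulate(readings, offset_mhz=50))  # [1095, 1134, 1160, 1160, 1134]
```

The sketch moves at most one step per poll in either direction, loosely mirroring the "pre-determined steps" described above. It ignores temperature, which the real microprocessor also takes into account. With a 50MHz offset, every reading lands exactly 50MHz higher, boost steps included.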